Introduction

Imagine a translator who converts your words into a different language for someone else to understand. Compiled languages work similarly. Instead of words, they translate your code into a special language that the computer's processor can understand. This special language, called machine code, is different for different kinds of processors (like different countries having different languages). Because of this, Go code has to be translated (compiled) for the specific kind of machine it is going to run on.

There's a good reason for this extra step! Go shines when it comes to working on many different computer systems (Windows, Mac, Linux, etc.). Unlike some other languages, you don't need a special program (interpreter) on each machine to run your Go code. Once you translate (compile) your Go program for a specific computer, it can run there directly. This makes it easy to move your program from one system to another and keeps your code the same for all of them. That's why Go is a popular choice for building programs that work well on many different computers.

Let's take a deep look at how the Go compiler works!

How does the Go compiler work?

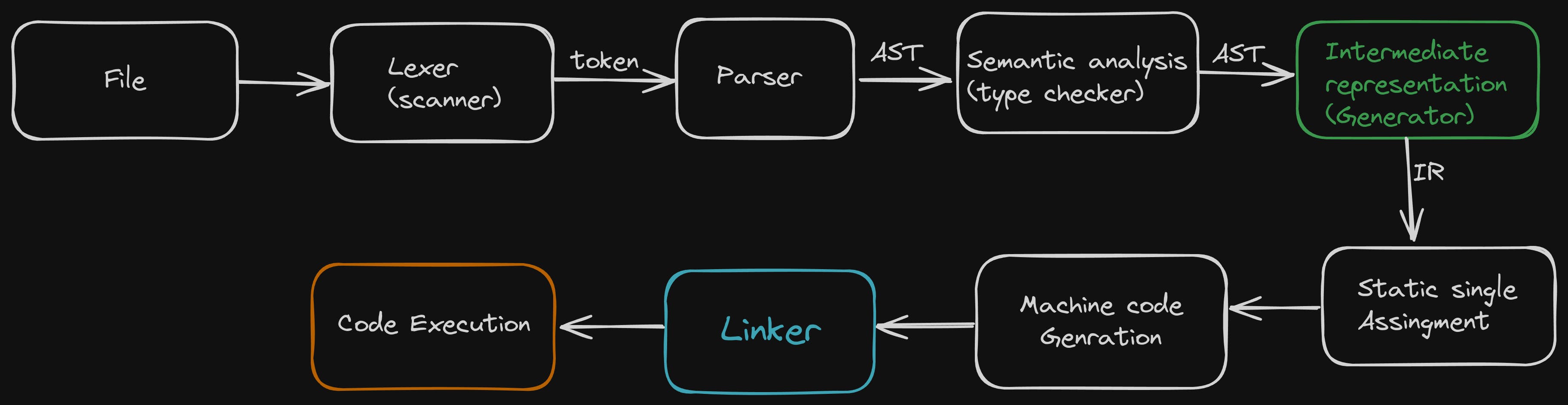

Unlike some interpreted languages, Go relies on a compiler to translate human-readable source code written in Go into machine code that a specific computer's processor can directly execute. This compilation process typically involves several stages.

Lexical analysis

The first step, known as lexical analysis or scanning, breaks down the source code into meaningful units called tokens.

These tokens can be keywords (like if, for), operators (+, -, *), identifiers (user-defined names like username), literals (values like 10, "hello"), or other language elements specific to Go.

Here, the compiler acts like a meticulous sorter, categorizing each piece of code without getting lost in the whitespace or comments, which are ignored as they don't affect how the program runs.
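To make the idea of tokens concrete, here is a minimal sketch that scans one line of Go with the standard library's go/scanner package. One assumption to flag: the real compiler uses its own internal scanner, so this only illustrates the concept. The tokenize helper and the demo.go file name are made up for this example.

```go
package main

import (
	"fmt"
	"go/scanner"
	"go/token"
)

// tokenize returns the kind of every token the standard library
// scanner finds in src. (Hypothetical helper for illustration.)
func tokenize(src string) []string {
	fset := token.NewFileSet()
	file := fset.AddFile("demo.go", fset.Base(), len(src))

	var s scanner.Scanner
	// Mode 0 means comments are skipped rather than returned as tokens.
	s.Init(file, []byte(src), nil, 0)

	var kinds []string
	for {
		_, tok, _ := s.Scan()
		if tok == token.EOF {
			break
		}
		kinds = append(kinds, tok.String())
	}
	return kinds
}

func main() {
	// The whitespace and the trailing comment produce no tokens of their own.
	for _, kind := range tokenize("x := 10 + y // comment") {
		fmt.Println(kind)
	}
}
```

The first tokens reported are an identifier, the := operator, an integer literal, the + operator and another identifier. The scanner also reports an automatically inserted semicolon at the end of the statement, which is how Go lets you leave semicolons out of your source.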

Parsing

Following lexical analysis, the compiler performs parsing. This stage acts like a code architect, analyzing the structure of the code to build an Abstract Syntax Tree (AST).

The AST is a hierarchical representation of the program, similar to a family tree. It shows the relationships between different code elements like keywords, expressions, and statements.

Unlike a parse tree (which some compilers use internally), the AST discards unnecessary details, focusing on the core structure for better efficiency in later stages.
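As a small illustration of the tree structure, the sketch below parses the expression 1 + 2*3 with the standard library's go/parser package and looks at the top of the resulting AST. This is the library parser, not necessarily the exact one the compiler uses internally, and rootAndRightOps is a hypothetical helper written only for this example.

```go
package main

import (
	"fmt"
	"go/ast"
	"go/parser"
)

// rootAndRightOps parses a binary expression and reports the operator at
// the root of the AST and, if the right operand is itself a binary
// expression, the operator of that subtree.
func rootAndRightOps(expr string) (root, right string, err error) {
	node, err := parser.ParseExpr(expr)
	if err != nil {
		return "", "", err
	}
	top, ok := node.(*ast.BinaryExpr)
	if !ok {
		return "", "", fmt.Errorf("%q is not a binary expression", expr)
	}
	root = top.Op.String()
	if sub, ok := top.Y.(*ast.BinaryExpr); ok {
		right = sub.Op.String()
	}
	return root, right, nil
}

func main() {
	root, right, err := rootAndRightOps("1 + 2*3")
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	// Multiplication binds tighter, so it ends up deeper in the tree.
	fmt.Println("root operator:", root)
	fmt.Println("right subtree operator:", right)
}
```

The addition sits at the root and the multiplication sits below it, which is how operator precedence is recorded in the shape of the tree rather than in parentheses.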

Semantic Analysis

After constructing the AST, the compiler performs semantic analysis. Imagine it as a code detective, meticulously examining the program's structure to ensure it follows the language's rules.

This stage goes beyond syntax (how the code is written) and delves into semantics (meaning). It verifies crucial aspects like:

Variable declaration and usage: Are variables declared before use? Do they have compatible types (e.g., adding an integer and a string is an error)?

Scope: Are variables only accessible within their intended regions of code?

Type consistency: Are operators used with compatible data types (e.g., applying the logical && operator to two integers is an error)?
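The checks above can be tried out with the standard library's go/types package, which implements Go's type-checking rules. This is only a sketch: the check helper and the sample sources are invented for this example, and it assumes the source being checked has no imports, so no importer needs to be configured.

```go
package main

import (
	"fmt"
	"go/ast"
	"go/parser"
	"go/token"
	"go/types"
)

// check parses and type-checks one import-free source file and returns
// the first error found, or nil if the file is valid.
func check(src string) error {
	fset := token.NewFileSet()
	file, err := parser.ParseFile(fset, "demo.go", src, 0)
	if err != nil {
		return err // a syntax error, caught before semantic analysis
	}
	var conf types.Config // zero value is enough when src has no imports
	_, err = conf.Check("demo", fset, []*ast.File{file}, nil)
	return err
}

func main() {
	samples := []string{
		// Valid: both operands are ints.
		"package demo\nfunc add(a, b int) int { return a + b }\n",
		// Invalid: an int cannot be added to a string.
		"package demo\nfunc bad(n int, s string) int { return n + s }\n",
		// Invalid: y is never declared.
		"package demo\nfunc bad() int { return y }\n",
	}
	for _, src := range samples {
		fmt.Println(check(src))
	}
}
```

All three samples are syntactically fine, so the parser accepts them. Only the semantic checks tell the valid one apart from the other two.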

Intermediate Representation

After successfully verifying the code's structure and meaning, the compiler generates an intermediate representation (IR). Think of IR as a blueprint for the program, independent of any specific computer architecture. It captures the program's core logic in a way that's easier for further optimizations.

Once the IR is established, the compiler can perform various optimizations to make the program run more efficiently. Here are some common optimization techniques:

Dead Code Elimination: The compiler identifies and removes code sections that are unreachable or don't contribute to the program's outcome, similar to removing unused pieces from a blueprint.

Function Call Inlining: For frequently called functions, the compiler can directly embed their code within the calling function, reducing overhead associated with function calls. It's like merging specific instructions from different parts of the blueprint into a single, streamlined step.

Devirtualization: In Go, calling a method through an interface uses dynamic dispatch (choosing the correct method implementation at runtime). When the compiler can prove which concrete type is stored in the interface, it can devirtualize the call and replace it with a direct call to that type's method. This is like pre-selecting the specific tool needed for a task based on the blueprint, instead of figuring it out on the fly.

Escape Analysis: The compiler analyzes how variables are used and determines whether they stay within the function that created them or "escape" it (for example, because a pointer to them is returned). Variables that do not escape can live on the stack, which is cheap, while variables that escape must be allocated on the heap. It's like analyzing material usage in the blueprint to determine if specific pieces stay within the construction zone or need special handling.

By performing these optimizations, the compiler can create a more efficient version of the program from the platform-independent IR, ultimately leading to faster execution.
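Here is a small sketch of code that these optimizations typically apply to. The type and function names are invented for this example, and the actual decisions depend on the compiler version. Building with go build -gcflags=-m asks the compiler to print its inlining and escape analysis decisions, so you can check what it does on your machine.

```go
package main

import "fmt"

type point struct{ x, y int }

// sum is tiny and has no loops, which makes it a likely candidate
// for function call inlining.
func sum(p point) int {
	return p.x + p.y
}

// newPoint returns the address of a local variable. Because p must
// outlive the call, escape analysis generally has to place it on the heap.
func newPoint(x, y int) *point {
	p := point{x, y}
	return &p
}

func main() {
	local := point{1, 2} // passed by value, so it can stay on the stack
	fmt.Println(sum(local))
	fmt.Println(newPoint(3, 4).x)
}
```

Go does not force you to choose between stack and heap yourself: you write the same code either way, and the compiler picks the placement based on this analysis.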

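Static Single Assignment (SSA)

Once the compiler has a platform-independent blueprint of the program (the intermediate representation or IR), it converts it into Static Single Assignment (SSA) form, in which every variable is assigned exactly once. This form makes it easy to see where each value comes from, so the compiler can perform further optimizations to squeeze out the most performance. These optimizations are like fine-tuning the blueprint to ensure efficient construction: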

Constant Folding: Imagine evaluating simple math problems like 2 + 3 before even starting construction. Similarly, constant folding pre-computes expressions with known values at compile time, reducing the need for runtime calculations.

Loop Optimization: Loops are crucial for repetitive tasks. The compiler can analyze loops and potentially unroll them (rewrite them with fewer iterations), improve loop conditions, or remove unnecessary loop iterations entirely. Think of it as streamlining repetitive tasks in the blueprint to minimize wasted steps.

Dead Code Elimination: Just like removing unused materials from a blueprint, dead code elimination identifies and removes code sections that don't contribute to the program's functionality. This frees up resources and improves execution speed.

These are just a few examples, and the Go compiler employs a range of optimization techniques to create the most efficient machine code possible from the IR.
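The sketch below shows two things: a constant expression that Go evaluates at compile time, and a function whose comments show how its assignments could be renamed in SSA form. The x1, x2, x3 names are only notation for this example, not real compiler output. To see what the compiler actually produces, you can build with the GOSSAFUNC environment variable set to a function name (for example GOSSAFUNC=ssaSketch go build), which writes an ssa.html report.

```go
package main

import "fmt"

// secondsPerDay is a constant expression, so it is evaluated at
// compile time and no multiplication happens at runtime.
const secondsPerDay = 24 * 60 * 60

func secondsIn(days int) int {
	return days * secondsPerDay
}

// ssaSketch reassigns x several times. In SSA form every assignment
// gets its own name, as the comments show.
func ssaSketch() int {
	x := 1    // SSA: x1 = 1
	x = x + 2 // SSA: x2 = x1 + 2
	x = x * 4 // SSA: x3 = x2 * 4
	return x  // every operand is known, so this can be folded to a constant
}

func main() {
	fmt.Println(secondsIn(2))
	fmt.Println(ssaSketch())
}
```

Because each name is assigned exactly once, the compiler never has to ask which assignment to x a later line refers to. That is what makes constant folding and dead code elimination straightforward at this stage.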

Code Generation

After all the optimizations are applied, the compiler is ready to translate the fine-tuned IR into machine code, the language the target computer's processor understands directly. This stage acts like translating the blueprint into actual building instructions:

Machine Code vs. Assembly: In some cases, the compiler might generate assembly code as an intermediate step. Assembly code is a human-readable representation of machine code, using abbreviations and symbols instead of raw 0s and 1s. It provides a way for programmers to inspect the generated instructions if needed.

Direct Machine Code Generation: For some simpler architectures or when maximum efficiency is crucial, the compiler might directly produce machine code, skipping the assembly stage.

Ultimately, the choice between assembly and direct machine code generation depends on the specific compiler and target architecture. Regardless of the approach, this final translation step allows the program to be understood and executed by the target computer's processor.
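If you want to look at the instructions generated for your own code, the small program below is a convenient starting point. The add function is made up for this example, and the //go:noinline directive is only there so that the function is not inlined away and is easy to find. Building with go build -gcflags=-S prints an assembly listing while compiling, and go tool objdump can disassemble a finished binary.

```go
package main

import "fmt"

// add is deliberately tiny so that its generated instructions are
// easy to locate in the assembly listing.
//
//go:noinline
func add(a, b int) int {
	return a + b
}

func main() {
	fmt.Println(add(2, 3))
}
```

The listing will look different for every GOARCH value, which is a direct way to see that this final stage is tied to the target architecture.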

Linking: Building the Final Package

While we've focused on compiling a single source file, real-world programs often consist of multiple source files and potentially rely on pre-written code in external libraries. This is where the linker comes in, acting like a construction foreman assembling the final executable:

Combining Machine Code: The linker takes the machine code generated for each source file and any relevant libraries. It then combines them into a single executable file, ensuring all the program's parts work together seamlessly.

Resolving References: Imagine building a house where different rooms are prefabricated. The linker acts similarly, resolving references between different parts of the program. It ensures that functions and variables called from one source file can be found and used correctly in another, even if they come from separate libraries.

The Final Binary: After successfully combining and resolving references, the linker produces a complete executable binary. This binary file contains all the instructions needed for the program to run on the target system.

By combining the machine code from various sources and ensuring everything works together, the linker plays a crucial role in creating a functional executable program.
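One place where you can watch the linker at work is the -X linker flag, which sets the value of a package-level string variable while the final binary is being assembled. In the sketch below, the version variable, the banner function and the api binary name are assumptions made for this example.

```go
package main

import "fmt"

// version keeps the value "dev" unless the linker overrides it, e.g.
//
//	go build -ldflags "-X main.version=1.2.3" -o api
var version = "dev"

// banner builds the string the program prints at startup.
func banner() string {
	return "api " + version
}

func main() {
	fmt.Println(banner())
}
```

The source code is the same in both builds. The different output comes entirely from what the linker wrote into the final binary.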

Execution

With the linker's help, we now have a final executable – a self-contained program ready to run on the target machine. This executable acts like a blueprint translated into detailed instructions for the computer's workers (the processor and memory). When you execute the program, the following happens:

Loading the Executable: The operating system loads the executable into memory, making its instructions accessible to the processor.

Following the Instructions: The processor starts fetching and executing the instructions in the machine code, one by one. These instructions manipulate data in memory, perform calculations, and control the program's flow, ultimately executing the logic you meticulously crafted in the original Go source code.

Bonus points:

Automatic Memory Management with the Garbage Collector

One of Go's significant advantages is its automatic memory management. Unlike some languages where you need to manually allocate and free memory, Go employs a garbage collector that operates behind the scenes. Think of it as a diligent janitor who automatically cleans up unused memory in your program, preventing memory leaks and simplifying development.

A Collaborative Effort: How the Compiler and Runtime Manage Garbage Collection

This automatic memory management isn't magic. The Go compiler works closely with the runtime system to make garbage collection efficient. The compiler records where pointers live in your program's data, so the collector knows which references to follow, while the runtime keeps track of memory allocation during execution. When the runtime identifies unused memory (objects that can no longer be reached from your code), the garbage collector steps in and reclaims it, ensuring your program has the resources it needs without manual intervention.

This collaborative approach between compiler and runtime frees you from the burden of explicit memory management, allowing you to focus on writing clean and efficient Go code.
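You can observe the garbage collector from inside a program with the runtime package. The sketch below creates some short-lived garbage, forces a collection with runtime.GC, and compares the number of completed GC cycles before and after. The helper names are invented for this example. Forcing a collection is only done here for demonstration, since in a real program the runtime decides when to collect.

```go
package main

import (
	"fmt"
	"runtime"
)

// churn allocates n buffers of the given size and drops each one
// right away, so they become garbage.
func churn(n, size int) int {
	total := 0
	for i := 0; i < n; i++ {
		buf := make([]byte, size)
		total += len(buf)
	}
	return total
}

// completedGCs reports how many garbage collection cycles have finished.
func completedGCs() uint32 {
	var m runtime.MemStats
	runtime.ReadMemStats(&m)
	return m.NumGC
}

// collectOnce creates garbage, forces one collection, and returns the
// GC cycle count from before and after.
func collectOnce() (before, after uint32) {
	before = completedGCs()
	churn(100, 1<<20)
	runtime.GC() // blocks until the collection is complete
	after = completedGCs()
	return before, after
}

func main() {
	before, after := collectOnce()
	fmt.Println("GC cycles before:", before)
	fmt.Println("GC cycles after: ", after)
}
```

Notice that the program never frees a buffer itself. It simply stops using them, and the runtime reclaims the memory.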

Cross Compilation Errors: Deep Dive

While reading about how compilers work, you probably noticed one point: after generating and optimizing the platform-independent IR (intermediate representation), the compiler generates machine code or assembly code for one specific target architecture.

This means that if I compile my code on a Linux machine with an x86-64 CPU (which Go calls amd64), the compiled binary will not run on a system with a different architecture, such as arm64 or ppc64le. Trying to run it there throws a format error:

./api: cannot execute binary file: Exec format error

This is because the compiled binary consists of [opcodes](en.wikipedia.org/wiki/Opcode), the machine instructions that are tied to a specific architecture. That's why you get a format error: an arm64 CPU, for example, can't interpret x86-64 instructions.

With an interpreted language like Python, on the other hand, the interpreter installed on each machine takes care of the opcodes and other architecture-specific instructions.
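When you hit this error on Linux, it can help to check which architecture a binary was actually built for. Linux binaries use the ELF format, and the standard library's debug/elf package can read the machine field from the file header. The sketch below is only a starting point: the goarchForELF helper is invented for this example and covers just a few architectures.

```go
package main

import (
	"debug/elf"
	"fmt"
	"os"
	"runtime"
)

// goarchForELF maps the machine code stored in an ELF header to the
// matching GOARCH name. Only a few common values are handled here.
func goarchForELF(code uint16) string {
	switch elf.Machine(code) {
	case elf.EM_X86_64:
		return "amd64"
	case elf.EM_AARCH64:
		return "arm64"
	case elf.EM_386:
		return "386"
	case elf.EM_ARM:
		return "arm"
	default:
		return "unknown"
	}
}

func main() {
	if len(os.Args) < 2 {
		fmt.Println("usage: archcheck <path-to-elf-binary>")
		return
	}
	f, err := elf.Open(os.Args[1])
	if err != nil {
		fmt.Println("not a readable ELF file:", err)
		return
	}
	defer f.Close()

	target := goarchForELF(uint16(f.Machine))
	fmt.Printf("binary targets %s, this machine is %s\n", target, runtime.GOARCH)
}
```

If the two names printed at the end differ, the binary was built for another architecture and has to be rebuilt for the machine you want to run it on.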

To fix this error, rebuild the binary for the system it will run on by setting GOOS and GOARCH:

$ env GOOS=<target operating system> GOARCH=<target CPU architecture> go build -o <binary name>

For example:

$ env GOOS=linux GOARCH=arm64 go build -o api-arm64

To find out the GOOS and GOARCH of a machine, create a main.go file on it, import the runtime package, and print both values with fmt.Println like this:

package main

import (
	"fmt"
	"runtime"
)

func main() {
	// GOOS and GOARCH describe the system this program was built for.
	fmt.Println(runtime.GOOS)
	fmt.Println(runtime.GOARCH)
}

To list every operating system and architecture combination that Go supports, run this command:

$ go tool dist list

To list only the Linux targets:

$ go tool dist list | grep linux

Bonus tip:

For production releases of your Go project, you can use a tool called GoReleaser to create binaries for different architectures.